The Rise of Digital Helpers

In today’s fast-paced digital landscape, chatbots and virtual assistants have become essential parts of everyday life. From asking Siri about the weather to chatting with customer support bots on e-commerce sites, these intelligent systems are everywhere. But have you ever wondered how they seem to get smarter the more you interact with them?

The answer lies in a fascinating intersection of artificial intelligence (AI), machine learning (ML), and natural language processing (NLP). These technologies enable chatbots and virtual assistants to not only respond to queries but also learn from interactions, adapt to user preferences, and deliver increasingly personalized experiences.

This article explores how chatbots and virtual assistants learn, how their training works, and how they evolve over time with the help of data from users like you. Whether you’re a tech enthusiast or a curious beginner, understanding these digital companions can help you better use them—and perhaps even build one of your own.

What Are Chatbots and Virtual Assistants?

Though often used interchangeably, chatbots and virtual assistants have different roles:

- Chatbots are rule-based or AI-powered software programs designed to simulate conversation. They’re commonly used in customer service, websites, and messaging platforms.

- Virtual assistants go further—they not only chat but also perform actions like setting reminders, sending messages, making calls, or integrating with smart home devices. Examples include Amazon’s Alexa, Apple’s Siri, and Google Assistant.

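The AI-powered versions of both rely on models that tend to improve as they are exposed to more data, including data from your own interactions. Purely rule-based chatbots, by contrast, only change when a developer edits their rules.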

Foundations: The Learning Process

1. Pre-training

Before interacting with users, most chatbots and virtual assistants undergo a pre-training phase. This is where machine learning models are fed massive amounts of existing data—conversations, text from books, websites, and forums. The goal is to teach them grammar, syntax, and general knowledge.

For example, OpenAI’s GPT models were trained on a wide range of internet text. This gave the models a broad understanding of language and context before they ever had a real-time conversation.
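To make the idea of learning language patterns from existing text concrete, here is a deliberately tiny sketch. It is not how GPT-style models work internally; it swaps in a simple bigram counter (which word tends to follow which) as a stand-in for pre-training. The corpus and the function names `train_bigram_model` and `predict_next` are made up for illustration.

```python
from collections import Counter, defaultdict


def train_bigram_model(corpus):
    """Count, for every word, which words follow it in the corpus."""
    following = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current_word, next_word in zip(words, words[1:]):
            following[current_word][next_word] += 1
    return following


def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Tiny made-up corpus, purely for illustration.
corpus = [
    "the weather is sunny today",
    "the weather is rainy today",
    "the weather is sunny again",
]
model = train_bigram_model(corpus)
print(predict_next(model, "is"))  # "sunny" follows "is" most often
```

Real pre-training uses neural networks and vastly more text, but the underlying goal is similar: predict what comes next based on patterns seen in the data.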

2. Fine-tuning

Once a base model is trained, developers fine-tune it for specific tasks or domains:

- A medical chatbot will be fine-tuned with medical terminology.

- A customer support bot will learn about product catalogs, return policies, and FAQs.

This ensures the chatbot isn’t just “smart”—it’s relevant to its environment.

3. Live Learning (User Interaction)

Here’s where you come in. As you interact with a chatbot or assistant:

- It records your preferences, like language, tone, and topics.

- It learns your habits—what time you usually ask questions, what kind of music you like, or how you phrase commands.

- It adapts responses based on past interactions.

Over time, this creates a personalized and more efficient experience.

Some assistants even use reinforcement learning—where positive feedback (like continued use or thumbs-up ratings) helps them reinforce good behavior and avoid mistakes.
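A minimal sketch of that feedback loop, assuming a bot that chooses between a few candidate phrasings and receives thumbs-up or thumbs-down ratings. The `FeedbackLearner` class is hypothetical, and it simply favors the best-rated option; real reinforcement learning systems also balance trying new options against reusing known good ones.

```python
from collections import defaultdict


class FeedbackLearner:
    """Tracks the average user rating for each candidate response."""

    def __init__(self, candidates):
        self.candidates = list(candidates)
        self.total_reward = defaultdict(float)
        self.times_shown = defaultdict(int)

    def record_feedback(self, response, thumbs_up):
        """Store a reward of 1.0 for a thumbs-up and 0.0 otherwise."""
        self.times_shown[response] += 1
        self.total_reward[response] += 1.0 if thumbs_up else 0.0

    def average_reward(self, response):
        """Average rating so far; 0.0 if the response was never rated."""
        shown = self.times_shown[response]
        return self.total_reward[response] / shown if shown else 0.0

    def best_response(self):
        """Pick the candidate with the highest average rating so far."""
        return max(self.candidates, key=self.average_reward)


short_reply = "Sure, done."
friendly_reply = "OK! I've set that up for you."

learner = FeedbackLearner([short_reply, friendly_reply])
learner.record_feedback(short_reply, thumbs_up=False)
learner.record_feedback(friendly_reply, thumbs_up=True)
learner.record_feedback(friendly_reply, thumbs_up=True)
print(learner.best_response())
```

After these three ratings, the friendlier phrasing has the higher average, so the bot would prefer it next time.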

These learning processes are vital for building systems that not only function well but also delight users by anticipating their needs and communicating in a way that feels natural.

Key Technologies Behind the Magic

Natural Language Processing (NLP)

NLP helps bots understand the structure and meaning of human language. This includes:

- Tokenization: Breaking down sentences into smaller units (tokens), such as words or word pieces.

- Intent recognition: Figuring out what the user wants (e.g., “Book me a flight”).

- Entity extraction: Identifying important details like dates, places, or names.

Advanced NLP allows bots to detect subtleties in language, handle multi-turn conversations, and disambiguate similar queries. This enables more accurate and human-like responses.
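The three steps listed above can be sketched with nothing more than keyword matching and regular expressions. This is a toy illustration, not how production NLP works (modern systems use trained models); the intent names, keyword sets, and function names are all assumptions made for the example.

```python
import re

# Hypothetical intents and the keywords that hint at them.
INTENT_KEYWORDS = {
    "book_flight": {"book", "flight", "fly"},
    "get_weather": {"weather", "forecast", "rain"},
}

DAY_PATTERN = (
    r"\b(today|tomorrow|monday|tuesday|wednesday|"
    r"thursday|friday|saturday|sunday)\b"
)


def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def recognize_intent(tokens):
    """Pick the intent whose keyword set overlaps the tokens the most."""
    token_set = set(tokens)
    scores = {
        intent: len(keywords & token_set)
        for intent, keywords in INTENT_KEYWORDS.items()
    }
    best_intent = max(scores, key=scores.get)
    return best_intent if scores[best_intent] > 0 else "unknown"


def extract_entities(text):
    """Find a capitalized destination after 'to' and a simple day word."""
    entities = {}
    destination = re.search(r"\bto ([A-Z][a-z]+)", text)
    if destination:
        entities["destination"] = destination.group(1)
    day = re.search(DAY_PATTERN, text.lower())
    if day:
        entities["day"] = day.group(1)
    return entities


message = "Book me a flight to Paris tomorrow"
tokens = tokenize(message)
print(tokens)
print(recognize_intent(tokens))   # book_flight
print(extract_entities(message))  # {'destination': 'Paris', 'day': 'tomorrow'}
```

Keyword rules like these break quickly on real conversations, which is exactly why trained models took over, but they show what each step is responsible for.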

Machine Learning (ML)

ML algorithms use data to make predictions. In general, the more high-quality data they have, the better they perform. Chatbots use ML to:

- Learn from previous conversations.

- Predict the most helpful or relevant response.

- Continuously update their models.

With ML, bots move from static scripts to dynamic experiences where responses evolve based on user behavior and preferences.
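One simple way to picture "learning from previous conversations" is a bot that stores past question and answer pairs and, for a new question, returns the answer attached to the most similar stored question. The sketch below assumes a bag-of-words comparison with cosine similarity; the `ResponsePredictor` class and the sample support answers are invented for illustration, and real systems use far richer models.

```python
import math
from collections import Counter


def bag_of_words(text):
    """Represent text as word counts, ignoring word order."""
    return Counter(text.lower().split())


def cosine_similarity(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[word] * b[word] for word in a)  # missing words count as 0
    norm_a = math.sqrt(sum(count * count for count in a.values()))
    norm_b = math.sqrt(sum(count * count for count in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class ResponsePredictor:
    """Answers new questions using the most similar past question."""

    def __init__(self):
        self.examples = []  # list of (word counts, response) pairs

    def learn(self, question, response):
        """Store one past conversation turn."""
        self.examples.append((bag_of_words(question), response))

    def predict(self, question):
        """Return the response paired with the closest stored question."""
        if not self.examples:
            return None
        query = bag_of_words(question)
        best = max(self.examples, key=lambda ex: cosine_similarity(query, ex[0]))
        return best[1]


predictor = ResponsePredictor()
predictor.learn("how do i return an item",
                "You can start a return from your orders page.")
predictor.learn("where is my order",
                "You can track your order from the orders page.")
print(predictor.predict("how can i return my item"))
```

Every call to `learn` gives the bot one more example to draw on, which is the "continuously update" idea in miniature.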

Context Awareness

More advanced assistants keep track of context:

- If you say “What’s the weather like?”, and then “How about tomorrow?”, the assistant knows you’re still talking about the weather.

- They use context windows to understand back-and-forth conversation.

Context awareness is essential for making interactions feel seamless and intuitive, rather than fragmented or robotic.
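The weather follow-up above can be sketched as a small piece of remembered state. This is a simplified illustration: the `ConversationContext` class and its keyword checks are hypothetical, and real assistants track much more than a single topic.

```python
class ConversationContext:
    """Remembers the last topic so follow-up questions can reuse it."""

    def __init__(self):
        self.last_topic = None

    def interpret(self, message):
        """Return the topic and day the user is most likely asking about."""
        text = message.lower()
        if "weather" in text:
            self.last_topic = "weather"
        # A follow-up like "How about tomorrow?" names no topic of its own,
        # so the topic from the earlier turn is reused.
        day = "tomorrow" if "tomorrow" in text else "today"
        return {"topic": self.last_topic, "day": day}


context = ConversationContext()
first = context.interpret("What's the weather like?")
second = context.interpret("How about tomorrow?")
print(first)   # {'topic': 'weather', 'day': 'today'}
print(second)  # {'topic': 'weather', 'day': 'tomorrow'}
```

Without the stored `last_topic`, the second message would be impossible to answer, because it never mentions weather at all.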

Sentiment Analysis

Some systems assess emotional tone. If you’re frustrated, the assistant might respond more sympathetically. This is particularly important in customer service environments.

Sentiment-aware bots can adjust tone, suggest helpful resources, or escalate the conversation to a human when needed.
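Here is a rough sketch of how tone detection could drive those decisions, assuming a small hand-written word list. Production systems typically use trained sentiment models instead; the word lists, the threshold, and the action names below are all assumptions for the example.

```python
# Hypothetical word lists; a real system would learn these from data.
NEGATIVE_WORDS = {"angry", "frustrated", "terrible", "useless", "broken", "worst"}
POSITIVE_WORDS = {"great", "thanks", "love", "perfect", "helpful"}


def sentiment_score(message):
    """Count positive words minus negative words in the message."""
    words = [word.strip(".,!?") for word in message.lower().split()]
    return sum((w in POSITIVE_WORDS) - (w in NEGATIVE_WORDS) for w in words)


def choose_action(message, escalate_below=-1):
    """Decide how the bot should react based on the sentiment score."""
    score = sentiment_score(message)
    if score < escalate_below:
        return "escalate_to_human"
    if score < 0:
        return "respond_sympathetically"
    return "respond_normally"


print(choose_action("This is useless, I'm frustrated!"))  # escalate_to_human
print(choose_action("The app is broken."))                # respond_sympathetically
print(choose_action("Thanks, that was helpful"))          # respond_normally
```

The `escalate_below` threshold is the design lever here: lowering it keeps more conversations with the bot, while raising it hands off to a human sooner.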

Real-World Examples of Learning in Action

1. Netflix and Spotify

While not chatbots in the traditional sense, both services use similar principles. As you watch or listen, their algorithms learn your tastes and refine recommendations.

These platforms rely on collaborative filtering and deep learning models to predict what you’ll enjoy based on your behavior and the preferences of users with similar habits.
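The core idea of collaborative filtering fits in a few lines. The sketch below is a toy user-based version, not what Netflix or Spotify actually run: it finds users whose ratings resemble yours and suggests what they liked that you have not seen. The users, titles, and ratings are made up.

```python
import math

# Hypothetical ratings on a 1-5 scale.
ratings = {
    "ana":   {"Drama A": 5, "Comedy B": 1, "Thriller C": 4},
    "ben":   {"Drama A": 4, "Comedy B": 2, "Thriller C": 5, "Drama D": 5},
    "chloe": {"Drama A": 1, "Comedy B": 5, "Comedy E": 4},
}


def similarity(user_a, user_b):
    """Cosine similarity over the items both users have rated."""
    shared = set(ratings[user_a]) & set(ratings[user_b])
    if not shared:
        return 0.0
    dot = sum(ratings[user_a][item] * ratings[user_b][item] for item in shared)
    norm_a = math.sqrt(sum(ratings[user_a][item] ** 2 for item in shared))
    norm_b = math.sqrt(sum(ratings[user_b][item] ** 2 for item in shared))
    return dot / (norm_a * norm_b)


def recommend(user):
    """Rank unseen items, weighting each rating by user similarity."""
    scores = {}
    for other in ratings:
        if other == user:
            continue
        weight = similarity(user, other)
        for item, rating in ratings[other].items():
            if item not in ratings[user]:
                scores[item] = scores.get(item, 0.0) + weight * rating
    return sorted(scores, key=scores.get, reverse=True)


print(recommend("ana"))  # ['Drama D', 'Comedy E']
```

Ana's ratings look much more like Ben's than Chloe's, so Ben's favorite that she has not seen ranks first.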

2. Google Assistant

It learns from your search history, emails (with permission), calendar, and voice patterns. Ask it to “call mom,” and it knows who that is based on your contacts.

Its ability to understand commands in context and carry out multi-step tasks makes it a strong example of modern AI-powered assistance.

3. E-commerce Chatbots

Many online stores use bots that track your browsing and purchase history. They remember:

- Your size

- Your last search

- Products you added to your cart

Next time, they may proactively suggest deals or restocks.

This personalization can increase conversions and improve customer satisfaction by simplifying the shopping experience.

4. Smart Homes

Assistants like Alexa learn your routines. If you always say “Good night” at 10 PM, the assistant might suggest automating lights and alarms.

They can also recognize voice commands from multiple household members, tailor responses, and even make proactive suggestions based on usage patterns.
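Spotting a routine can be as simple as counting how often the same command shows up at the same hour. The sketch below assumes a log of (command, hour) pairs and a hypothetical `suggest_routines` helper; it is an illustration of the idea, not a description of how Alexa does it.

```python
from collections import Counter


def suggest_routines(command_log, min_occurrences=3):
    """Return (command, hour) pairs that recur often enough to automate."""
    counts = Counter(command_log)
    return [pair for pair, count in counts.items() if count >= min_occurrences]


# Made-up log of voice commands with the hour (24-hour clock) they were spoken.
log = [
    ("good night", 22),
    ("good night", 22),
    ("play jazz", 18),
    ("good night", 22),
    ("good night", 23),
]
print(suggest_routines(log))  # [('good night', 22)]
```

Here "good night" at 10 PM appears three times, so it crosses the threshold, while the one-off commands are ignored.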

Privacy and Data Concerns

While personalization enhances user experience, it raises important ethical questions:

- What data is collected?

- Who has access to it?

- Can you opt out or delete your history?

Companies are increasingly adding features that let users manage privacy settings. It’s wise to:

- Review permissions

- Delete old voice recordings

- Turn off features you don’t use

Transparency and control are becoming core features of responsible AI.

Regulatory Shifts

Governments worldwide are introducing regulations like the GDPR (European Union) and the CCPA (California) to protect users’ digital rights. These rules push companies to be more accountable in how they collect, use, and store data.

Challenges in Learning and Adaptation

Ambiguity

Human language is messy. The same phrase can mean different things depending on context. For example, “That’s sick!” could be an insult or a compliment.

Bots must use NLP and historical context to reduce errors and respond accurately.

Accents and Dialects

Voice assistants sometimes struggle with regional accents or slang. Developers work hard to expose them to diverse speech samples.

Efforts are ongoing to make speech recognition systems more inclusive and representative.

Bias in Training Data

If the data used to train a model includes stereotypes or biased language, the chatbot may unknowingly replicate those biases. Ethical AI development involves careful monitoring and filtering of training data.

Bias mitigation techniques include diversifying training sources, human review, and fairness audits.

Sarcasm and Humor

Bots still struggle with sarcasm, irony, and complex humor. These require not just language understanding but emotional intelligence and cultural context.

Ongoing research in computational linguistics aims to close this gap and make bots more socially aware.

How You Can Train Your Assistant

Even if you’re not a developer, your behavior shapes how these systems learn.

Tips:

- Be consistent: Use the same voice commands for better recognition.

- Correct mistakes: If the assistant misunderstands, correcting it helps future accuracy.

- Use feedback tools: Many assistants let you rate responses. Take advantage.

- Explore settings: Train your preferences (news sources, music apps, etc.).

- Update regularly: Improvements and bug fixes come through software updates.

Being proactive can lead to smoother, more customized interactions over time.

The Future of Learning Bots

We’re entering a future where bots won’t just react—they’ll anticipate. Imagine:

- Predictive assistants that book flights based on calendar entries.

- Emotionally aware chatbots that adapt their tone to your mood.

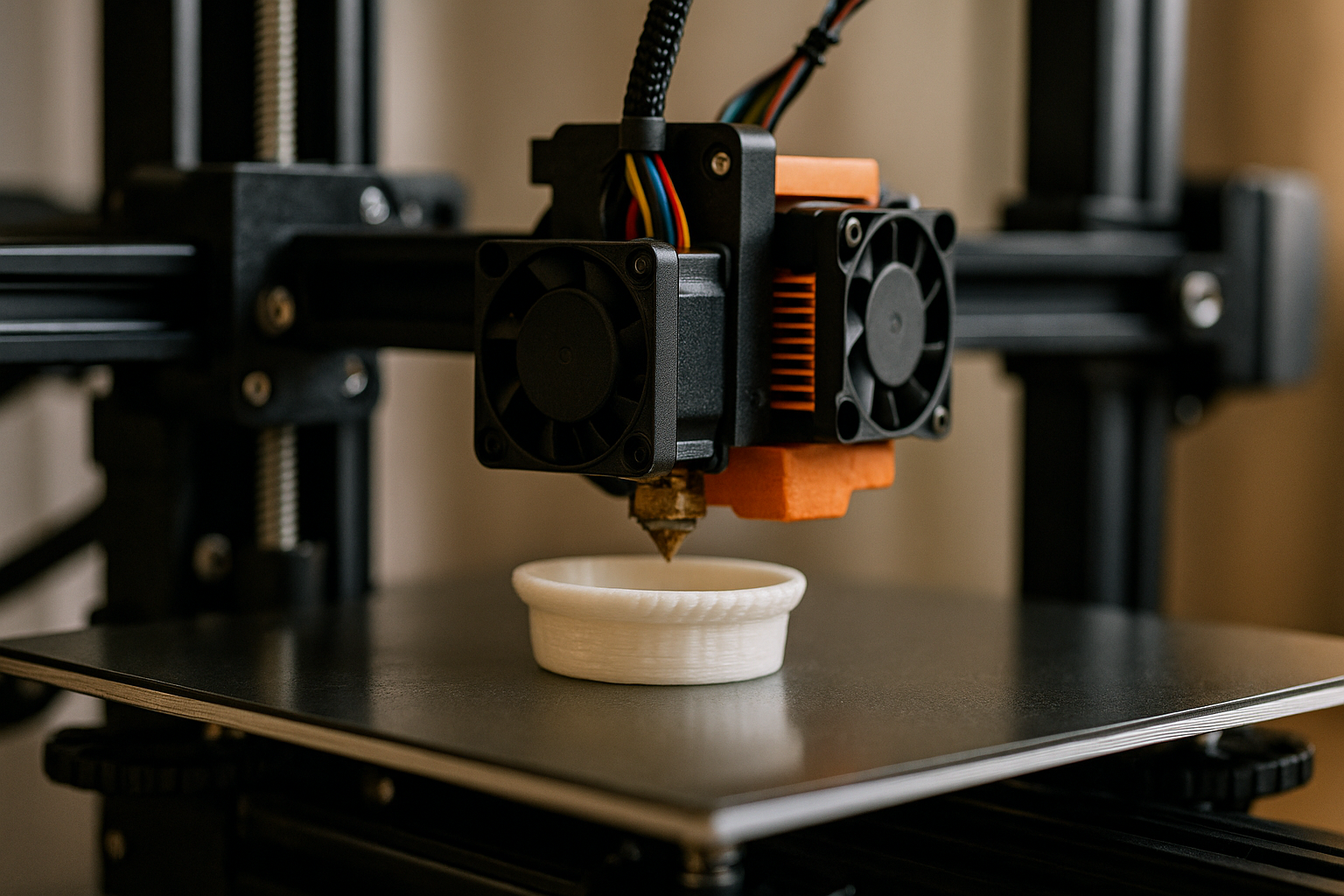

- Multimodal understanding, combining text, voice, image, and even gestures.

Integration with IoT

Bots will link seamlessly with Internet of Things (IoT) devices:

- Your fridge orders groceries.

- Your car suggests routes based on your habits.

- Your smartwatch gives health updates to your assistant.

Cross-platform Intelligence

Future systems will learn across platforms. What you ask on your smart speaker may influence suggestions on your phone or laptop.

This interconnectedness could create a more holistic and responsive digital environment tailored to your lifestyle.

Should You Build Your Own?

With platforms like Dialogflow, Microsoft Bot Framework, and Rasa, building a basic chatbot has never been easier. You can:

- Add bots to websites for customer support.

- Automate responses on social media.

- Create internal tools for your team.

Open-source libraries and no-code tools now allow even non-developers to design smart, engaging bots with minimal resources.

If you’re technically inclined, exploring chatbot development is a rewarding experience—combining AI, UX, and creativity.

Final Thoughts: From Tools to Companions

Chatbots and virtual assistants are evolving from simple Q&A tools into intuitive digital partners. As they learn from us, they become more helpful, relevant, and engaging.

But it’s not a one-way street. As users, we also learn how to interact more effectively, set boundaries, and leverage these tools to make life easier.

Whether you’re using Alexa to control your home, asking Google to plan your day, or chatting with customer service online, remember: every interaction teaches the system—and shapes your experience.

In this age of personalized AI, your voice doesn’t just matter: it helps shape how these systems behave. And as these technologies continue to evolve, we move closer to a world where digital assistants become indispensable collaborators in our daily lives.